1. Introduction

In this tutorial, we’ll learn how to install and use the OpenCV computer vision library and apply it to real-time face detection.

2. Installation

To use the OpenCV library in our project, we need to add the opencv Maven dependency to our pom.xml:

<dependency>

<groupId>org.openpnp</groupId>

<artifactId>opencv</artifactId>

<version>4.9.0-0</version>

</dependency>

For Gradle users, we’ll need to add the dependency to our build.gradle file:

implementation group: 'org.openpnp', name: 'opencv', version: '4.9.0-0'

After adding the library to our dependencies, we can use the features provided by OpenCV.

3. Using the Library

To start using OpenCV, we need to initialize the library, which we can do in our main method:

OpenCV.loadShared();

OpenCV is a class that holds methods related to loading native packages required by the OpenCV library for various platforms and architectures.

It’s worth noting that the official OpenCV documentation loads the library slightly differently:

System.loadLibrary(Core.NATIVE_LIBRARY_NAME);

Both of those method calls will actually load the required native libraries.

The difference here is that the latter requires the native libraries to be installed. The former, however, can install the libraries to a temporary folder if they are not available on a given machine. Due to this difference, the loadShared method is usually the best way to go.

Alternatively, we can download and compile the library according to the official documentation and load the library before using OpenCV-related classes:

System.load("/opencv/build/lib/libopencv_java4100.dylib");

Now that we’ve initialized the library, let’s see what we can do with it.

4. Loading Images

To start, let’s load the sample image from the disk using OpenCV:

public static Mat loadImage(String imagePath) {

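return Imgcodecs.imread(imagePath);

}

This method loads the given image as a Mat object, which is OpenCV’s matrix representation of an image. If the file can’t be read, imread() returns an empty Mat instead of throwing an exception.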

To save the previously loaded image, we can use the imwrite() method of the Imgcodecs class:

public static void saveImage(Mat imageMatrix, String targetPath) {

Imgcodecs.imwrite(targetPath, imageMatrix);

}

5. Haar Cascade Classifier

Before diving into face detection, let’s understand the core concepts that make it possible.

Simply put, a classifier is a program that assigns a new observation to a group based on what it has learned from earlier examples. A cascading classifier does this by chaining several classifiers together. Each subsequent classifier uses the output of the previous one as additional information, which improves the overall classification.

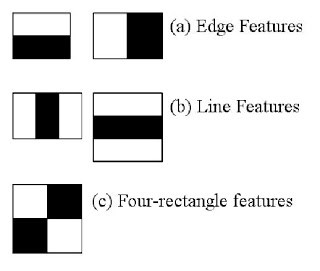
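To make the chaining idea concrete, here’s a minimal sketch in plain Java. It assumes the arrangement used by Haar cascades, where a candidate must pass every stage and is discarded at the first stage that rejects it. The class CascadeSketch and its methods are hypothetical names for illustration, and the integer "candidate" with its toy checks stands in for the image regions and trained stages of a real cascade.

```java
import java.util.List;
import java.util.function.IntPredicate;

// Illustrative only: a cascade modeled as an ordered list of checks.
class CascadeSketch {

    // Counts how many stages the candidate passes before the first rejection.
    static int stagesPassed(List<IntPredicate> stages, int candidate) {
        int passed = 0;
        for (IntPredicate stage : stages) {
            if (!stage.test(candidate)) {
                break; // early rejection: the remaining stages never run
            }
            passed++;
        }
        return passed;
    }

    // A candidate is accepted only if every stage lets it through.
    static boolean accept(List<IntPredicate> stages, int candidate) {
        return stagesPassed(stages, candidate) == stages.size();
    }

    public static void main(String[] args) {
        // Toy stages, ordered from cheapest to most selective (an assumption).
        List<IntPredicate> stages = List.of(
            v -> v > 10,
            v -> v % 2 == 0,
            v -> v < 100);

        System.out.println(accept(stages, 42));       // true
        System.out.println(accept(stages, 7));        // false
        System.out.println(stagesPassed(stages, 15)); // 1
    }
}
```

Because most candidates fail an early stage, the later and more expensive stages run only on the few that remain.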

5.1. Haar Features

In our case, we’ll use a Haar-feature-based cascade classifier for face detection in OpenCV.

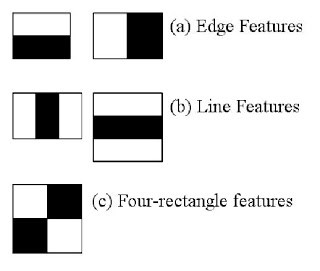

Haar features are filters that are used to detect edges and lines on the image. The filters are seen as squares with black and white colors:

These filters are applied multiple times to an image, pixel by pixel, and the result is collected as a single value. This value is the difference between the sum of pixels under the black square and the sum of pixels under the white square.
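The calculation described above can be sketched in plain Java. This isn’t OpenCV’s code: the class HaarFeatureSketch and its method names are hypothetical, the image is a small hand-written grayscale array, and the integral image (summed-area table) is an assumption about how we might compute the rectangle sums efficiently.

```java
// Illustrative only: evaluates one two-rectangle "edge" feature on a grayscale array.
class HaarFeatureSketch {

    // Builds a summed-area table with an extra zero row and column, so that
    // ii[y][x] holds the sum of all pixels above and to the left of (x, y).
    static long[][] integralImage(int[][] pixels) {
        int h = pixels.length;
        int w = pixels[0].length;
        long[][] ii = new long[h + 1][w + 1];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                ii[y + 1][x + 1] = pixels[y][x] + ii[y][x + 1] + ii[y + 1][x] - ii[y][x];
            }
        }
        return ii;
    }

    // Sum of the w-by-h rectangle whose top-left corner is (x, y), using four lookups.
    static long rectSum(long[][] ii, int x, int y, int w, int h) {
        return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x];
    }

    // Edge feature: the left half is the white square, the right half the black one.
    // The value is (sum under black) - (sum under white).
    static long edgeFeature(long[][] ii, int x, int y, int w, int h) {
        int half = w / 2;
        long white = rectSum(ii, x, y, half, h);
        long black = rectSum(ii, x + half, y, half, h);
        return black - white;
    }

    public static void main(String[] args) {
        // A 4x4 image with a sharp vertical edge between dark and bright columns.
        int[][] image = {
            {10, 10, 200, 200},
            {10, 10, 200, 200},
            {10, 10, 200, 200},
            {10, 10, 200, 200}
        };
        long[][] ii = integralImage(image);
        System.out.println(edgeFeature(ii, 0, 0, 4, 4)); // 1520
    }
}
```

A large absolute value means the region contains a strong edge aligned with the filter, while a uniform region yields a value of zero.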

6. Face Detection

Generally, the cascade classifier needs to be pre-trained to detect anything at all.

Since the training process can be long and would require an extensive dataset, we’re going to use one of the pre-trained models offered by OpenCV. We’ll place this XML file in our resources folder for easy access.
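One detail is worth keeping in mind when we turn that resource into a path for the classifier: URL.getFile() returns the path in its URL-encoded form, so a directory name containing a space shows up as %20. The sketch below uses only the JDK and a hypothetical helper named toFilesystemPath to show one way of getting a decoded filesystem path instead. It assumes the resource is a plain file on disk; a resource packed inside a JAR has no filesystem path of its own and would need to be copied out first.

```java
import java.net.URISyntaxException;
import java.net.URL;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Illustrative only: converts a file: URL into a decoded filesystem path.
class ResourcePathSketch {

    // Going through a URI decodes escapes such as %20; getFile() does not.
    static String toFilesystemPath(URL url) {
        try {
            return Paths.get(url.toURI()).toString();
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("Not a valid URI: " + url, e);
        }
    }

    public static void main(String[] args) throws Exception {
        // Stand-in for a resources folder whose path contains a space.
        Path dir = Files.createTempDirectory("my models");
        Path file = Files.createFile(dir.resolve("cascade.xml"));
        URL url = file.toUri().toURL();

        System.out.println(url.getFile());         // the space appears as %20
        System.out.println(toFilesystemPath(url)); // the space is preserved
    }
}
```

In our project, the URL would come from getClassLoader().getResource(...) rather than from a temporary file.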

Let’s step through the process of detecting a face.

We’ll mark each detected face by outlining it with a red rectangle.

To get started, we need to load the image in Mat format from our source path:

Mat loadedImage = loadImage(sourceImagePath);

Then, we’ll declare a MatOfRect object to store the faces we find:

MatOfRect facesDetected = new MatOfRect();

Next, we need to initialize the CascadeClassifier, load the pre-trained model, and run the detection:

CascadeClassifier cascadeClassifier = new CascadeClassifier();

int minFaceSize = Math.round(loadedImage.rows() * 0.1f);

String filename = FaceDetection.class.getClassLoader().getResource("haarcascades/haarcascade_frontalface_alt.xml").getFile();

cascadeClassifier.load(filename);

cascadeClassifier.detectMultiScale(loadedImage,

facesDetected,

1.1,

3,

Objdetect.CASCADE_SCALE_IMAGE,

new Size(minFaceSize, minFaceSize),

new Size()

);

Above, parameter 1.1 denotes the scale factor we want to use, specifying how much the image size is reduced at each image scale. The following parameter, 3, is minNeighbors. This is the number of neighbors a candidate rectangle should have in order to retain it.
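To get a feel for what the scale factor controls, here’s a rough sketch in plain Java. It doesn’t reproduce OpenCV’s internal search loop: the class ScalePyramidSketch and its windowSizes method are hypothetical names, and we simply assume a square search window that grows from the minimum size by the scale factor until it no longer fits the image.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative only: lists the window sizes a multi-scale search might visit.
class ScalePyramidSketch {

    // Grows the window from minSize by scaleFactor while it still fits within maxSize.
    static List<Integer> windowSizes(int minSize, int maxSize, double scaleFactor) {
        if (scaleFactor <= 1.0) {
            throw new IllegalArgumentException("scaleFactor must be greater than 1");
        }
        List<Integer> sizes = new ArrayList<>();
        for (double size = minSize; size <= maxSize; size *= scaleFactor) {
            sizes.add((int) Math.round(size));
        }
        return sizes;
    }

    public static void main(String[] args) {
        int rows = 480;                             // assumed image height
        int minFaceSize = Math.round(rows * 0.1f);  // same rule as in the tutorial: 48

        System.out.println(windowSizes(minFaceSize, rows, 1.1).size()); // 25
        System.out.println(windowSizes(minFaceSize, rows, 1.5).size()); // 6
    }
}
```

For this 480-row example, a factor of 1.1 visits 25 sizes while 1.5 visits only 6, which hints at why a smaller factor is more thorough but slower.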

Finally, we’ll loop through the faces and save the result:

Rect[] facesArray = facesDetected.toArray();

for(Rect face : facesArray) {

Imgproc.rectangle(loadedImage, face.tl(), face.br(), new Scalar(0, 0, 255), 10);

}

saveImage(loadedImage, targetImagePath);

When we input our source image, we should now receive the output image with all the faces marked with a red rectangle.

This briefly describes the content of our detectFace() method. Let’s use this to test that everything is working correctly:

public static void main(String[] args) {

// Load the native library.

System.load("/opencv/build/lib/libopencv_java4100.dylib");

detectFace("portrait.jpg", "./processed.jpg");

}

In short, we load the native library and call the detectFace() method with the input file path and the output file path. As a result, we get a file named processed.jpg that contains a red rectangle around the face of the person depicted in the picture.

7. Accessing the Camera Using OpenCV

So far, we’ve seen how to perform face detection on loaded images. But most of the time, we want to do it in real time, and to do that, we need to access the camera.

However, to show an image from a camera, we need a few things besides the obvious—a camera. To show the images, we’ll use JavaFX.

Since we’ll be using an ImageView to display the pictures our camera has taken, we need a way to translate an OpenCV Mat to a JavaFX Image:

public Image mat2Img(Mat mat) {

MatOfByte bytes = new MatOfByte();

Imgcodecs.imencode(".png", mat, bytes);

InputStream inputStream = new ByteArrayInputStream(bytes.toArray());

return new Image(inputStream);

}

Here, we convert our Mat into bytes and then convert the bytes into an Image object.

We’ll start by streaming the camera view to a JavaFX Stage.

First, let’s initialize the library using the loadShared() method:

OpenCV.loadShared();

Next, we’ll create the stage with a VideoCapture and an ImageView to display the Image:

VideoCapture capture = new VideoCapture(0);

ImageView imageView = new ImageView();

HBox hbox = new HBox(imageView);

Scene scene = new Scene(hbox);

stage.setScene(scene);

stage.show();

Here, 0 is the ID of the camera we want to use. We also need to create an AnimationTimer to handle setting the image:

new AnimationTimer() {

@Override public void handle(long l) {

imageView.setImage(getCapture());

}

}.start();

Finally, our getCapture method handles converting the Mat to an Image:

public Image getCapture() {

Mat mat = new Mat();

capture.read(mat);

return mat2Img(mat);

}

The application should now create a window and live-stream the view from the camera to the ImageView.

8. Real-Time Face Detection

Finally, we can connect all the dots to create an application that detects a face in real time.

The code from the previous section is responsible for grabbing the image from the camera and displaying it to the user. Now, all we have to do is process the grabbed images before showing them on screen by using our CascadeClassifier class.

Let’s modify our getCapture method to also perform face detection:

public Image getCaptureWithFaceDetection() {

Mat mat = new Mat();

capture.read(mat);

Mat haarClassifiedImg = detectFace(mat);

return mat2Img(haarClassifiedImg);

}

Now, if we run our application, the face should be marked with a red rectangle.

We can also see a disadvantage of the cascade classifiers. If we turn our face too much in any direction, then the red rectangle disappears. This is because we’ve used a specific classifier that was trained only to detect the front of the face.

9. Summary

In this tutorial, we learned how to use OpenCV in Java.

We used a pre-trained cascade classifier to detect faces in images. With the help of JavaFX, we made the classifier detect faces in real time in images from a camera.

The code backing this article is available on GitHub.