1. Introduction

In this tutorial, we’ll learn how to configure a Dead Letter Queue mechanism for Apache Kafka using Spring.

2. Dead Letter Queues

A Dead Letter Queue (DLQ) stores messages that can't be processed correctly for various reasons, such as intermittent system failures, an invalid message schema, or corrupted content. These messages can later be removed from the DLQ for analysis or reprocessing.
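To make the idea concrete, here's a framework-free sketch of a DLQ. The class and method names are hypothetical, and this isn't how Kafka or Spring implement it: messages whose handler throws are parked in a separate list, together with the failure reason, instead of blocking the messages behind them.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.function.Consumer;

// Hypothetical, in-memory model of a DLQ; not Kafka or Spring code.
final class InMemoryDeadLetterQueue {

    // A message that could not be processed, plus the reason it failed.
    record DeadLetter(String message, String reason) {}

    private final List<String> processed = new ArrayList<>();
    private final List<DeadLetter> deadLetters = new ArrayList<>();

    // Drains the incoming messages in order; a message whose handler throws is
    // moved to the DLQ so that the messages behind it can still be processed.
    void drain(List<String> incoming, Consumer<String> handler) {
        Queue<String> mainQueue = new ArrayDeque<>(incoming);
        String message;
        while ((message = mainQueue.poll()) != null) {
            try {
                handler.accept(message);
                processed.add(message);
            } catch (RuntimeException e) {
                deadLetters.add(new DeadLetter(message, e.getMessage()));
            }
        }
    }

    List<String> processed() {
        return processed;
    }

    List<DeadLetter> deadLetters() {
        return deadLetters;
    }
}
```

For example, draining ok-1, corrupted, ok-2 with a handler that rejects corrupted messages leaves ok-1 and ok-2 processed and a single dead letter that records why corrupted failed, ready for later analysis or reprocessing.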

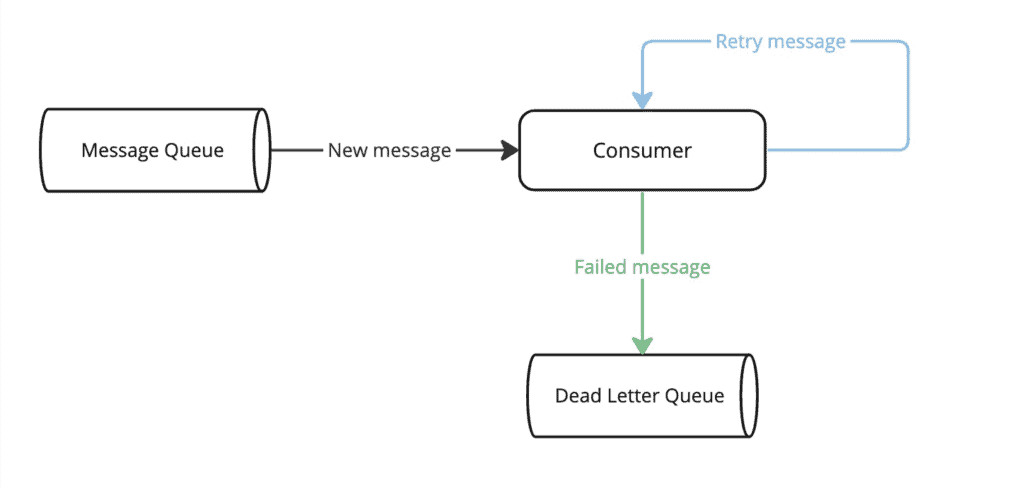

The following figure presents a simplified flow of the DLQ mechanism:

Using a DLQ is generally a good idea, but there are scenarios where it should be avoided. For example, a DLQ isn't recommended for a queue where the exact order of messages matters, because a message reprocessed from the DLQ is handled after messages that arrived later, which breaks the original arrival order.
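A small simulation makes the ordering problem visible. In the framework-free sketch below (the class, method, and message names are hypothetical), a message whose first attempt fails is parked in a DLQ and replayed only after the main queue has been drained:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical simulation, not Kafka code: messages listed in transientFailures
// fail on their first attempt and are replayed from the DLQ afterwards.
final class DlqOrderingDemo {

    static List<String> processingOrder(List<String> arrivals, Set<String> transientFailures) {
        List<String> order = new ArrayList<>();
        List<String> dlq = new ArrayList<>();
        for (String message : arrivals) {
            if (transientFailures.contains(message)) {
                dlq.add(message);      // first attempt fails: park the message in the DLQ
            } else {
                order.add(message);    // processed in arrival order
            }
        }
        // The DLQ is reprocessed later, after the messages behind it were already handled.
        order.addAll(dlq);
        return order;
    }
}
```

With arrivals open-account, deposit, close-account and a transient failure on deposit, the processing order becomes open-account, close-account, deposit, so the deposit would be applied to an account that is already closed.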

3. Dead Letter Queues in Spring Kafka

The equivalent of the DLQ concept in Spring Kafka is the Dead Letter Topic (DLT). In the following sections, we’ll see how the DLT mechanism works for a simple payment system.

3.1. Model Class

Let’s start with the model class:

import java.math.BigDecimal;
import java.util.Currency;

public class Payment {
    private String reference;
    private BigDecimal amount;
    private Currency currency;

    // standard getters and setters
}

Let’s also implement a utility method for creating events:

static Payment createPayment(String reference) {
    Payment payment = new Payment();
    payment.setAmount(BigDecimal.valueOf(71));
    payment.setCurrency(Currency.getInstance("GBP"));
    payment.setReference(reference);
    return payment;
}

3.2. Setup

Next, let’s add the required spring-kafka and jackson-databind dependencies:

<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
    <version>3.1.2</version>
</dependency>
<dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-databind</artifactId>
    <version>2.14.3</version>
</dependency>

We can now create the ConsumerFactory and ConcurrentKafkaListenerContainerFactory beans:

@Bean
public ConsumerFactory<String, Payment> consumerFactory() {
    Map<String, Object> config = new HashMap<>();
    config.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);
    return new DefaultKafkaConsumerFactory<>(
      config, new StringDeserializer(), new JsonDeserializer<>(Payment.class));
}

@Bean
public ConcurrentKafkaListenerContainerFactory<String, Payment> containerFactory() {
    ConcurrentKafkaListenerContainerFactory<String, Payment> factory =
      new ConcurrentKafkaListenerContainerFactory<>();
    factory.setConsumerFactory(consumerFactory());
    return factory;
}

Finally, let’s implement the consumer for the main topic:

@KafkaListener(topics = { "payments" }, groupId = "payments")
public void handlePayment(
  Payment payment, @Header(KafkaHeaders.RECEIVED_TOPIC) String topic) {
    log.info("Event on main topic={}, payload={}", topic, payment);
}

Before moving on to the DLT examples, we’ll discuss the retry configuration.

3.3. Turning Off Retries

In real-life projects, it’s common to retry processing an event in case of errors before sending it to the DLT. This can be easily achieved using the non-blocking retries mechanism provided by Spring Kafka.
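For context, a non-blocking retry setup could pair more attempts with a back-off, for example @RetryableTopic(attempts = "4", backoff = @Backoff(delay = 1000, multiplier = 2.0)). The framework-free sketch below doesn't talk to Kafka, and its class and method names are hypothetical. It only models the delay that such an exponential back-off would apply before each retry, assuming the delay is multiplied after every retry and capped at a maximum:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of Spring Kafka: models an exponential back-off.
final class BackOffSchedule {

    // Returns the delay (in ms) applied before each retry. attempts counts the first
    // delivery too, so attempts = 4 yields 3 retries and attempts = 1 yields none.
    static List<Long> retryDelays(int attempts, long initialDelayMs, double multiplier, long maxDelayMs) {
        if (attempts < 1) {
            throw new IllegalArgumentException("attempts must be >= 1");
        }
        List<Long> delays = new ArrayList<>();
        double delay = initialDelayMs;
        for (int retry = 1; retry < attempts; retry++) {
            delays.add(Math.min((long) delay, maxDelayMs));  // cap each delay
            delay *= multiplier;                              // grow for the next retry
        }
        return delays;
    }
}
```

With attempts = 4, an initial delay of 1000 ms, and a multiplier of 2.0, the model yields delays of 1000, 2000, and 4000 ms; with attempts = 1, as used in the rest of this article, it yields no retries at all.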

In this article, however, we’ll turn off the retries to highlight the DLT mechanism. An event will be published directly to the DLT when the consumer for the main topic fails to process it.

First, we need to define the producerFactory and retryableTopicKafkaTemplate beans. The latter is the KafkaTemplate that Spring Kafka uses to publish failed events to the DLT:

@Bean
public ProducerFactory<String, Payment> producerFactory() {
    Map<String, Object> config = new HashMap<>();
    config.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);
    return new DefaultKafkaProducerFactory<>(
      config, new StringSerializer(), new JsonSerializer<>());
}

@Bean
public KafkaTemplate<String, Payment> retryableTopicKafkaTemplate() {
    return new KafkaTemplate<>(producerFactory());
}

Now we can define the consumer for the main topic without additional retries, as described earlier:

@RetryableTopic(attempts = "1", kafkaTemplate = "retryableTopicKafkaTemplate")
@KafkaListener(topics = { "payments" }, groupId = "payments")
public void handlePayment(
  Payment payment, @Header(KafkaHeaders.RECEIVED_TOPIC) String topic) {
    log.info("Event on main topic={}, payload={}", topic, payment);
}

The attempts property in the @RetryableTopic annotation represents the total number of processing attempts, including the first delivery, made before the message is sent to the DLT. Setting it to "1" therefore disables retries.
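The semantics of attempts can be modelled without Kafka. In the sketch below (a hypothetical class, not Spring Kafka code), the handler is invoked up to attempts times in total, counting the first delivery, and the payload is routed to a dead letter list only if every invocation throws:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical model of the "attempts" semantics; not Spring Kafka code.
final class AttemptsModel {

    final List<String> deadLetterTopic = new ArrayList<>();
    int invocations = 0;

    void deliver(String payload, int attempts, Consumer<String> handler) {
        for (int attempt = 1; attempt <= attempts; attempt++) {
            invocations++;
            try {
                handler.accept(payload);
                return;                    // success: nothing is sent to the dead letter list
            } catch (RuntimeException e) {
                // failed attempt: try again if any attempts remain
            }
        }
        deadLetterTopic.add(payload);      // all attempts exhausted
    }
}
```

With attempts = 1 and a handler that always throws, the handler runs exactly once and the payload lands in the dead letter list, which mirrors the listener configuration above.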

4. Configuring Dead Letter Topic

We’re now ready to implement the DLT consumer:

@DltHandler
public void handleDltPayment(
  Payment payment, @Header(KafkaHeaders.RECEIVED_TOPIC) String topic) {
    log.info("Event on dlt topic={}, payload={}", topic, payment);
}

The method annotated with @DltHandler must be placed in the same class as the method annotated with @KafkaListener.

In the following sections, we’ll explore the three DLT configurations available in Spring Kafka. We’ll use a dedicated topic and consumer for each strategy to make each example easy to follow individually.
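Before looking at each configuration, here's a framework-free model of how the three strategies differ once the main consumer has failed with attempts = "1". The Strategy enum below only mirrors the names of Spring Kafka's DltStrategy values and isn't the real enum; maxDltDeliveries is a hypothetical bound that keeps the simulation finite and has no Spring Kafka counterpart:

```java
import java.util.function.Consumer;

// Hypothetical simulation of the three DLT strategies; not Spring Kafka code.
final class DltStrategySimulation {

    // Mirrors the names of the DltStrategy values only.
    enum Strategy { FAIL_ON_ERROR, ALWAYS_RETRY_ON_ERROR, NO_DLT }

    // Returns how many times the DLT handler is invoked for a payload whose
    // main processing has already failed.
    static int dltInvocations(Strategy strategy, Consumer<String> dltHandler,
                              String payload, int maxDltDeliveries) {
        if (strategy == Strategy.NO_DLT) {
            return 0;                                // the payload is never forwarded
        }
        int invocations = 0;
        while (invocations < maxDltDeliveries) {
            invocations++;
            try {
                dltHandler.accept(payload);
                return invocations;                  // DLT processing succeeded
            } catch (RuntimeException e) {
                if (strategy == Strategy.FAIL_ON_ERROR) {
                    return invocations;              // stop after the first DLT failure
                }
                // ALWAYS_RETRY_ON_ERROR: hand the payload to the DLT handler again
            }
        }
        return invocations;
    }
}
```

For a DLT handler that always throws, the model returns 0 invocations for NO_DLT, 1 for FAIL_ON_ERROR, and maxDltDeliveries for ALWAYS_RETRY_ON_ERROR, which is the behaviour the following tests check for.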

4.1. DLT With Fail on Error

Using the FAIL_ON_ERROR strategy, we can configure the DLT consumer to end the execution without retrying if the DLT processing fails:

@RetryableTopic(
  attempts = "1",
  kafkaTemplate = "retryableTopicKafkaTemplate",
  dltStrategy = DltStrategy.FAIL_ON_ERROR)
@KafkaListener(topics = { "payments-fail-on-error-dlt" }, groupId = "payments")
public void handlePayment(
  Payment payment, @Header(KafkaHeaders.RECEIVED_TOPIC) String topic) {
    log.info("Event on main topic={}, payload={}", topic, payment);
}

@DltHandler
public void handleDltPayment(
  Payment payment, @Header(KafkaHeaders.RECEIVED_TOPIC) String topic) {
    log.info("Event on dlt topic={}, payload={}", topic, payment);
}

Notably, the @KafkaListener consumer reads messages from the payments-fail-on-error-dlt topic.

Let’s verify that the event isn’t published to the DLT when the main consumer is successful:

@Test
public void whenMainConsumerSucceeds_thenNoDltMessage() throws Exception {
    CountDownLatch mainTopicCountDownLatch = new CountDownLatch(1);

    doAnswer(invocation -> {
        mainTopicCountDownLatch.countDown();
        return null;
    }).when(paymentsConsumer)
      .handlePayment(any(), any());

    kafkaProducer.send(TOPIC, createPayment("dlt-fail-main"));

    assertThat(mainTopicCountDownLatch.await(5, TimeUnit.SECONDS)).isTrue();
    verify(paymentsConsumer, never()).handleDltPayment(any(), any());
}

Let’s see what happens when both the main and the DLT consumers fail to process the event:

@Test
public void whenDltConsumerFails_thenDltProcessingStops() throws Exception {
    CountDownLatch mainTopicCountDownLatch = new CountDownLatch(1);
    CountDownLatch dlTTopicCountDownLatch = new CountDownLatch(2);

    doAnswer(invocation -> {
        mainTopicCountDownLatch.countDown();
        throw new Exception("Simulating error in main consumer");
    }).when(paymentsConsumer)
      .handlePayment(any(), any());

    doAnswer(invocation -> {
        dlTTopicCountDownLatch.countDown();
        throw new Exception("Simulating error in dlt consumer");
    }).when(paymentsConsumer)
      .handleDltPayment(any(), any());

    kafkaProducer.send(TOPIC, createPayment("dlt-fail"));

    assertThat(mainTopicCountDownLatch.await(5, TimeUnit.SECONDS)).isTrue();
    assertThat(dlTTopicCountDownLatch.await(5, TimeUnit.SECONDS)).isFalse();
    assertThat(dlTTopicCountDownLatch.getCount()).isEqualTo(1);
}

In the test above, the event was processed once by the main consumer and only once by the DLT consumer, which didn't retry after failing.
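The assertions in this test rely on sizing the DLT latch one larger than the number of invocations we expect. The JDK-only sketch below (a hypothetical class in which a plain thread stands in for the listener) shows why: a latch created with a count of 2 can't reach zero after a single countDown(), so await() times out and returns false, and getCount() then reports the one missing invocation:

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Hypothetical demo: a plain thread stands in for a Kafka listener.
final class OversizedLatchDemo {

    record Outcome(boolean reachedZero, long remaining) {}

    // Simulates `calls` handler invocations against a latch of size `latchSize`.
    static Outcome run(int calls, int latchSize, long timeoutMs) {
        CountDownLatch latch = new CountDownLatch(latchSize);
        Thread listener = new Thread(() -> {
            for (int i = 0; i < calls; i++) {
                latch.countDown();                 // one count per invocation
            }
        });
        listener.start();
        try {
            // false if fewer than latchSize invocations happened within the timeout
            boolean reachedZero = latch.await(timeoutMs, TimeUnit.MILLISECONDS);
            listener.join();                       // make sure all invocations are done
            return new Outcome(reachedZero, latch.getCount());
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
    }
}
```

Calling run(1, 2, 200) yields reachedZero = false and remaining = 1, the same combination the test asserts for the DLT latch.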

4.2. DLT Retry

Using the ALWAYS_RETRY_ON_ERROR strategy, we can configure the DLT consumer to try to reprocess the event when the DLT processing fails. This is the default strategy:

@RetryableTopic(
  attempts = "1",
  kafkaTemplate = "retryableTopicKafkaTemplate",
  dltStrategy = DltStrategy.ALWAYS_RETRY_ON_ERROR)
@KafkaListener(topics = { "payments-retry-on-error-dlt" }, groupId = "payments")
public void handlePayment(
  Payment payment, @Header(KafkaHeaders.RECEIVED_TOPIC) String topic) {
    log.info("Event on main topic={}, payload={}", topic, payment);
}

@DltHandler
public void handleDltPayment(
  Payment payment, @Header(KafkaHeaders.RECEIVED_TOPIC) String topic) {
    log.info("Event on dlt topic={}, payload={}", topic, payment);
}

Notably, the @KafkaListener consumer reads messages from the payments-retry-on-error-dlt topic.

Next, let’s test what happens when the main and the DLT consumers fail to process the event:

@Test
public void whenDltConsumerFails_thenDltConsumerRetriesMessage() throws Exception {
    CountDownLatch mainTopicCountDownLatch = new CountDownLatch(1);
    CountDownLatch dlTTopicCountDownLatch = new CountDownLatch(3);

    doAnswer(invocation -> {
        mainTopicCountDownLatch.countDown();
        throw new Exception("Simulating error in main consumer");
    }).when(paymentsConsumer)
      .handlePayment(any(), any());

    doAnswer(invocation -> {
        dlTTopicCountDownLatch.countDown();
        throw new Exception("Simulating error in dlt consumer");
    }).when(paymentsConsumer)
      .handleDltPayment(any(), any());

    kafkaProducer.send(TOPIC, createPayment("dlt-retry"));

    assertThat(mainTopicCountDownLatch.await(5, TimeUnit.SECONDS)).isTrue();
    assertThat(dlTTopicCountDownLatch.await(5, TimeUnit.SECONDS)).isTrue();
    assertThat(dlTTopicCountDownLatch.getCount()).isEqualTo(0);
}

As expected, the DLT consumer keeps trying to reprocess the event: its latch reaches zero, so the DLT handler was invoked at least three times.
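Because a CountDownLatch never drops below zero, await() returning true for a latch of three only shows that the DLT handler ran at least three times, not how many times in total. If the exact number matters, one option is to count invocations with an AtomicInteger next to the latch. This is a sketch: the class and method names are hypothetical, and a plain thread stands in for the repeatedly invoked DLT listener:

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo: a plain thread stands in for a DLT listener that keeps being invoked.
final class RedeliveryCounterDemo {

    record Observation(boolean sawAtLeastThree, int totalInvocations) {}

    static Observation observe(int invocationsToSimulate, long timeoutMs) {
        CountDownLatch atLeastThree = new CountDownLatch(3);
        AtomicInteger invocations = new AtomicInteger();
        Thread dltListener = new Thread(() -> {
            for (int i = 0; i < invocationsToSimulate; i++) {
                invocations.incrementAndGet();   // exact tally, keeps growing past 3
                atLeastThree.countDown();        // stays at 0 once three calls have happened
            }
        });
        dltListener.start();
        try {
            boolean reached = atLeastThree.await(timeoutMs, TimeUnit.MILLISECONDS);
            dltListener.join();                  // wait until every simulated call is done
            return new Observation(reached, invocations.get());
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
    }
}
```

For five simulated invocations, the latch only tells us that the threshold of three was reached, while the counter reports all five.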

4.3. Disabling DLT

The DLT mechanism can also be turned off using the NO_DLT strategy:

@RetryableTopic(
  attempts = "1",
  kafkaTemplate = "retryableTopicKafkaTemplate",
  dltStrategy = DltStrategy.NO_DLT)
@KafkaListener(topics = { "payments-no-dlt" }, groupId = "payments")
public void handlePayment(
  Payment payment, @Header(KafkaHeaders.RECEIVED_TOPIC) String topic) {
    log.info("Event on main topic={}, payload={}", topic, payment);
}

@DltHandler
public void handleDltPayment(
  Payment payment, @Header(KafkaHeaders.RECEIVED_TOPIC) String topic) {
    log.info("Event on dlt topic={}, payload={}", topic, payment);
}

Notably, the @KafkaListener consumer reads messages from the payments-no-dlt topic.

Let’s check that an event isn’t forwarded to the DLT when the consumer on the main topic fails to process it:

@Test
public void whenMainConsumerFails_thenDltConsumerDoesNotReceiveMessage() throws Exception {
    CountDownLatch mainTopicCountDownLatch = new CountDownLatch(1);
    CountDownLatch dlTTopicCountDownLatch = new CountDownLatch(1);

    doAnswer(invocation -> {
        mainTopicCountDownLatch.countDown();
        throw new Exception("Simulating error in main consumer");
    }).when(paymentsConsumer)
      .handlePayment(any(), any());

    doAnswer(invocation -> {
        dlTTopicCountDownLatch.countDown();
        return null;
    }).when(paymentsConsumer)
      .handleDltPayment(any(), any());

    kafkaProducer.send(TOPIC, createPayment("no-dlt"));

    assertThat(mainTopicCountDownLatch.await(5, TimeUnit.SECONDS)).isTrue();
    assertThat(dlTTopicCountDownLatch.await(5, TimeUnit.SECONDS)).isFalse();
    assertThat(dlTTopicCountDownLatch.getCount()).isEqualTo(1);
}

As expected, the event isn’t forwarded to the DLT, even though we’ve implemented a consumer annotated with @DltHandler.

5. Conclusion

In this article, we learned about three different DLT strategies. The first one is FAIL_ON_ERROR, under which the DLT consumer won’t try to reprocess an event in case of failure. In contrast, the ALWAYS_RETRY_ON_ERROR strategy ensures that the DLT consumer tries to reprocess the event in case of failure; it’s also the default when no other strategy is explicitly set. The last one is NO_DLT, which turns off the DLT mechanism altogether.

As always, the complete code can be found over on GitHub.