1. Overview

In this tutorial, we’ll learn what cognitive complexity is and how to calculate this metric. We’ll go step by step through the patterns and structures that increase the cognitive complexity of a function, including loops, conditional statements, jumps to labels, recursion, nesting, and more.

Following that, we’ll discuss the detrimental effects that cognitive complexity can have on code maintainability. Finally, we’ll explore a few refactoring techniques that can help us mitigate these negative effects.

2. Cyclomatic vs. Cognitive Complexity

For a long time, cyclomatic complexity was the standard way of measuring code complexity. While it provides a decent overall assessment, it overlooks some important aspects that make code harder to understand. As a result, a new metric emerged that allows us to measure how hard a piece of code is to understand with more accuracy.

2.1. Cyclomatic Complexity

Cyclomatic complexity is one of the first metrics that allowed us to measure code complexity. It was introduced by Thomas J. McCabe in 1976, who defined the cyclomatic complexity of a function as the number of linearly independent paths through that piece of code.

For example, a switch statement that creates five different branches will result in a cyclomatic complexity of five:

```java
public String tennisScore(int pointsWon) {
    switch (pointsWon) {
        case 0: return "Love"; // +1
        case 1: return "Fifteen"; // +1
        case 2: return "Thirty"; // +1
        case 3: return "Forty"; // +1
        default: throw new IllegalArgumentException(); // +1
    }
} // cyclomatic complexity = 5
```

While we can use this metric to quantify the number of distinct paths in our code, it doesn’t let us reliably compare how hard different functions are to understand. It overlooks crucial aspects that it fails to penalize appropriately: multiple nesting levels, jumps to labels via break or continue, recursion, and complex boolean expressions.

As a result, we can end up with functions that are clearly harder to understand and maintain without having a larger cyclomatic complexity. For instance, the cyclomatic complexity of countVowels is also five:

```java
public String countVowels(String word) {
    int count = 0;
    for (String c : word.split("")) { // +1
        // vowels is assumed to be a field holding the vowel letters
        for (String v : vowels) { // +1
            if (c.equalsIgnoreCase(v)) { // +1
                count++;
            }
        }
    }
    if (count == 0) { // +1
        return "does not contain vowels";
    }
    return "contains %s vowels".formatted(count); // +1 for the method's base path
} // cyclomatic complexity = 5
```
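McCabe’s definition can be computed from a function’s control-flow graph. In that graph, E is the number of edges, N the number of nodes, and P the number of connected components (1 for a single function). For structured code, this reduces to the number of decision points π plus one, where a branch with k outgoing paths counts as k − 1 decisions. Checking both examples above this way:

$$
\begin{aligned}
M &= E - N + 2P \\
M &= \pi + 1 \quad \text{(single structured function)} \\
M_{\text{tennisScore}} &= (5 - 1) + 1 = 5 \\
M_{\text{countVowels}} &= (1 + 1 + 1 + 1) + 1 = 5
\end{aligned}
$$

The switch has five outgoing paths and contributes four decisions. The two loops and two if statements of countVowels contribute one decision each.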

2.2. Cognitive Complexity

To address these shortcomings, Sonar developed the cognitive complexity metric, with the primary goal of providing a reliable measure of code understandability. Its underlying motivation was to encourage refactoring toward good code quality and readability.

Even though static code analyzers such as SonarQube can calculate the cognitive complexity of our code automatically, it’s worth understanding how the score is calculated and which main principles it’s based on.

First, there’s no penalty for structures that shorten the code and make it more readable. For example, extracting a method, or introducing an early return to decrease the nesting level of the code, doesn’t increase the score.

Second, the cognitive complexity increases for each break in the linear flow of the code. Loops, conditional statements, catch blocks, and other similar structures break this linear flow, so each of them increases the complexity score by one. Ideally, we should be able to read the code linearly, from top to bottom and left to right.

Lastly, nesting adds further penalties: a flow-breaking structure receives an extra increment equal to its nesting level. If we revisit our previous examples, the tennisScore function has a cognitive complexity of one, since the whole switch statement counts only once. On the other hand, the countVowels function is heavily penalized for the nested loops, resulting in a cognitive complexity of seven:

```java
public String countVowels(String word) {
    int count = 0;
    for (String c : word.split("")) { // +1
        for (String v : vowels) { // +2 (nesting level = 1)
            if (c.equalsIgnoreCase(v)) { // +3 (nesting level = 2)
                count++;
            }
        }
    }
    if (count == 0) { // +1
        return "does not contain vowels";
    }
    return "contains %s vowels".formatted(count);
} // cognitive complexity = 7
```
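To make the scoring rule concrete, here’s a minimal sketch that models each flow-breaking structure as one increment plus its nesting level. It then sums the increments annotated in the two examples above. The CognitiveScore class and the Increment record are hypothetical names for this illustration, not SonarQube’s implementation:

```java
import java.util.List;

/**
 * A toy model of the cognitive complexity rules described above (not SonarQube's code):
 * every flow-breaking structure adds 1, and structures that take a nesting penalty
 * add their nesting level on top of that.
 */
class CognitiveScore {

    /** A flow-breaking structure and the nesting level at which it appears. */
    record Increment(String structure, int nestingLevel, boolean takesNestingPenalty) {
        int score() {
            return 1 + (takesNestingPenalty ? nestingLevel : 0);
        }
    }

    static int total(List<Increment> increments) {
        return increments.stream().mapToInt(Increment::score).sum();
    }

    /** The structures of countVowels, as annotated in the example above. */
    static List<Increment> countVowels() {
        return List.of(
            new Increment("outer for", 0, true),  // +1
            new Increment("inner for", 1, true),  // +2
            new Increment("nested if", 2, true),  // +3
            new Increment("final if", 0, true));  // +1
    }

    /** tennisScore: the whole switch counts once, however many cases it has. */
    static List<Increment> tennisScore() {
        return List.of(new Increment("switch", 0, true)); // +1
    }
}
```

Summing these increments gives seven for countVowels and one for tennisScore, matching the scores above. The takesNestingPenalty flag lets the model cover structures such as else or a jump to a label, which add one regardless of depth.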

3. Breaks in the Linear Flow

As mentioned in the previous section, we should be able to read code with minimal cognitive complexity smoothly and uninterruptedly from start to finish. However, elements that disrupt the natural flow of the code are penalized, increasing the complexity score. This is the case for the following structures:

- conditional statements: if, else, ternary operators, switch
- loops: for, while, do while
- catch blocks
- recursion
- jumps to labels: break and continue statements that target a label
- sequences of binary logical operators, such as && and ||
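Recursion and operator sequences are the least obvious items in this list, so here’s a small sketch annotated with the increments these rules assign. The class and method names are hypothetical examples, not code from this article. A new increment starts each time the operator changes, so `a && b || c` counts twice while `a && b && c` counts once:

```java
/** Hypothetical examples annotated with the cognitive complexity increments they receive. */
class FlowBreakExamples {

    // +1 for the ternary operator; +1 for the recursive call
    // cognitive complexity = 2
    static long factorial(int n) {
        return n <= 1 ? 1 : n * factorial(n - 1);
    }

    // +1 for the if; +1 for the && sequence; +1 for the || sequence
    // cognitive complexity = 3
    // (a condition like paid && inStock && !preOrder would be a single sequence: +1;
    // the unary ! operator adds nothing)
    static String shippingStatus(boolean paid, boolean inStock, boolean preOrder) {
        if (paid && inStock || preOrder) {
            return "ready to ship";
        }
        return "on hold";
    }
}
```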

Now, let’s look at a simple method and try to find the structures that make it less readable:

```java
public String readFile(String path) {
    String text = null;
    // +1 for the if; +1 for the sequence of || operators (the unary ! doesn't count)
    if (path == null || path.trim().isEmpty() || !path.endsWith(".txt")) {
        return DEFAULT_TEXT;
    }
    try {
        List<String> lines = Files.readAllLines(Path.of(path));
        text = "";
        // +1 for the loop
        OUT:
        for (String line : lines) {
            // +1 for the if statement
            if (line.trim().isEmpty()) {
                // +1 for the jump to a label
                continue OUT;
            }
            text += line;
        }
    } catch (IOException e) { // +1 for the catch block
        // +1 for the if statement
        // Files.readAllLines() reports a missing file as a NoSuchFileException
        if (e instanceof NoSuchFileException) {
            log.error("could not read the file, returning the default content", e);
        } else { // +1 for the else
            throw new RuntimeException(e);
        }
    }
    // +1 for the ternary operator
    return text == null ? DEFAULT_TEXT : text;
}
```

As it stands, the method doesn’t allow for a seamless linear flow: the flow-breaking structures we discussed add nine to its cognitive complexity.

4. Nested Flow-Breaking Structures

With each additional level of nested scopes, the readability of the code diminishes. As a result, every if, ternary operator, switch, loop, or catch block nested inside another if, else, catch, switch, loop, or lambda expression receives an additional increment equal to its nesting level. If we revisit the previous example, we’ll find two places where nesting leads to additional penalties in the complexity score:

```java
public String readFile(String path) {
    String text = null;
    if (path == null || path.trim().isEmpty() || !path.endsWith(".txt")) {
        return DEFAULT_TEXT;
    }
    try {
        List<String> lines = Files.readAllLines(Path.of(path));
        text = "";
        // nesting level is 0: try doesn't increase the nesting level
        OUT:
        for (String line : lines) {
            // nesting level is 1 => complexity +1
            if (line.trim().isEmpty()) {
                continue OUT;
            }
            text += line;
        }
    } catch (IOException e) { // nesting level is 0
        // nesting level is 1 => complexity +1
        if (e instanceof NoSuchFileException) {
            log.error("could not read the file, returning the default content", e);
        } else {
            throw new RuntimeException(e);
        }
    }
    return text == null ? DEFAULT_TEXT : text;
}
```

Consequently, this method has a cognitive complexity of eleven, which reflects how hard it is to read and understand. However, through refactoring, we can significantly reduce its cognitive complexity and improve its overall readability. We’ll go through this refactoring process in the next section.
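The nesting rule also covers lambda expressions, so the penalty applies even when no loop or if statement encloses the structure. Here’s a small hypothetical sketch, not taken from this article, where an if inside a lambda is scored at nesting level 1:

```java
import java.util.List;
import java.util.stream.Collectors;

/** A hypothetical example: the lambda raises the nesting level of the if inside it. */
class LambdaNesting {

    // cognitive complexity = 2
    static List<String> shout(List<String> words) {
        return words.stream()
            .map(word -> {
                // nesting level is 1 (inside the lambda) => +1 for the if, +1 for nesting
                if (word.isBlank()) {
                    return "";
                }
                return word.toUpperCase() + "!";
            })
            .collect(Collectors.toList());
    }
}
```

Extracting the lambda body into a named method would drop the nesting penalty, because the extracted method is scored on its own.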

5. Refactoring

There’s a range of refactoring techniques at our disposal to reduce the cognitive complexity of our code. Let’s explore each of them while highlighting how our IDE can help us apply them safely and efficiently.

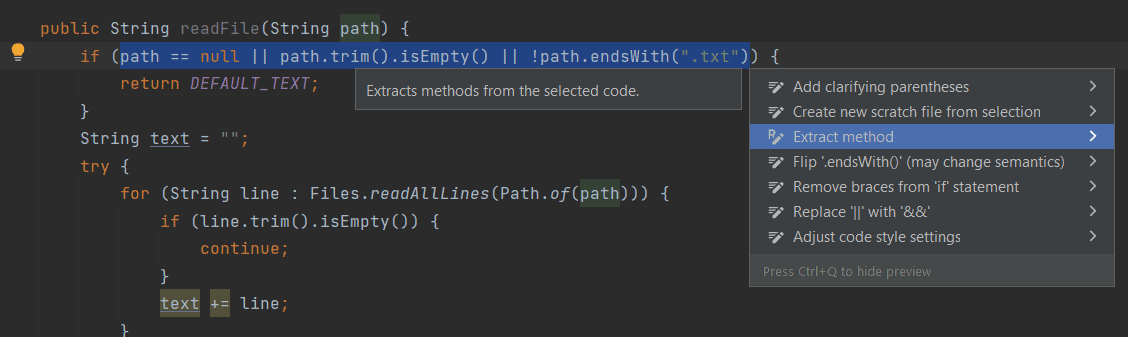

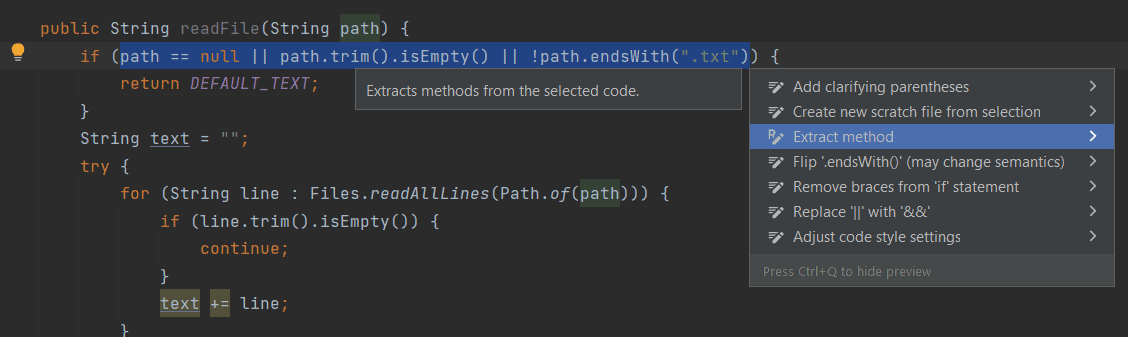

5.1. Extract Methods

One effective approach is extracting methods or classes, which lets us condense the code without incurring penalties, since each extracted method is scored on its own. In this case, we can use method extraction to validate the path argument, improving the overall clarity.

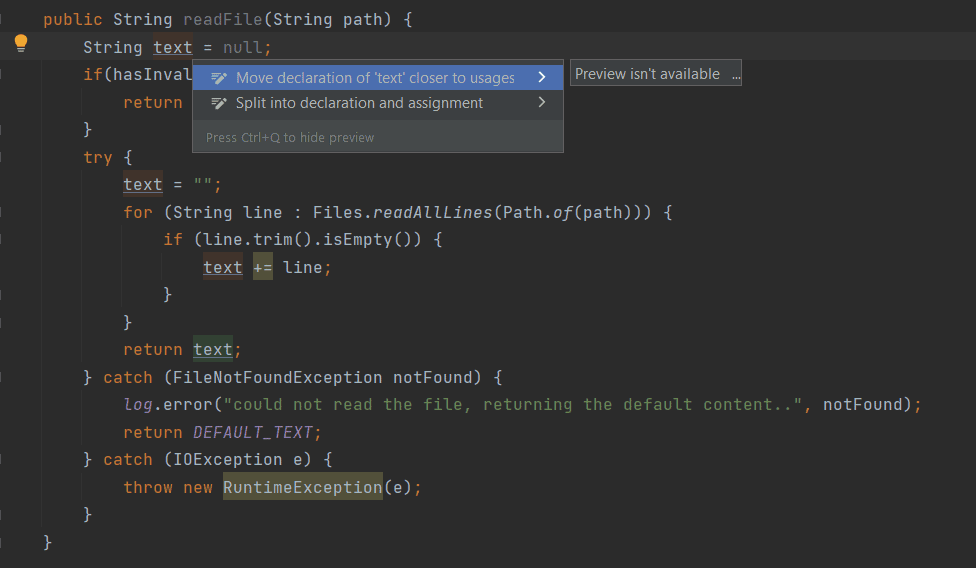

Most IDEs let us do this automatically through a shortcut or the refactoring menu. For instance, in IntelliJ IDEA, we can extract the hasInvalidPath method by highlighting the condition and pressing Ctrl+Alt+M (Cmd+Option+M on macOS):

```java
private boolean hasInvalidPath(String path) {
    return path == null || path.trim().isEmpty() || !path.endsWith(".txt");
}
```

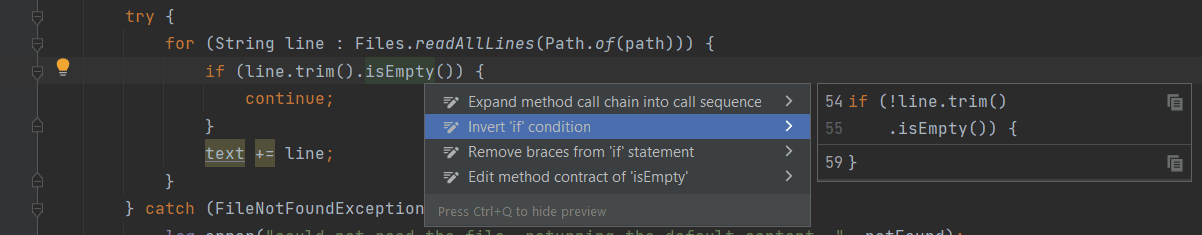
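As a sketch of how the score is split after this step, the class below pairs the extracted method with a guard like the one at the top of readFile. ExtractedValidation, readFileOrDefault, and the DEFAULT_TEXT value are hypothetical names for this illustration, and the file-reading logic is replaced with a placeholder:

```java
/** A sketch of the validation guard after extracting hasInvalidPath. */
class ExtractedValidation {

    static final String DEFAULT_TEXT = "default text"; // hypothetical value

    // Extracted method: +1 for the sequence of || operators, scored on its own
    static boolean hasInvalidPath(String path) {
        return path == null || path.trim().isEmpty() || !path.endsWith(".txt");
    }

    // The caller's guard now costs only +1 for the if; the method call itself is free
    static String readFileOrDefault(String path) {
        if (hasInvalidPath(path)) {
            return DEFAULT_TEXT;
        }
        return "would read " + path; // placeholder for the file-reading logic
    }
}
```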

5.2. Invert Conditions

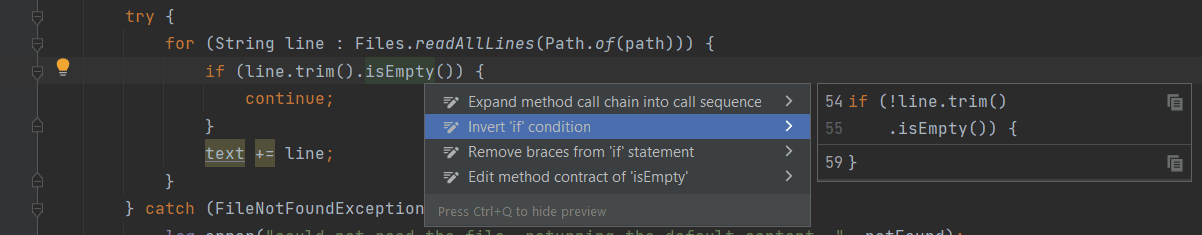

Depending on the context, inverting simple if statements can be a convenient way of decreasing the nesting level of the code or removing jumps. In our example, we can invert the if statement that checks whether the line is empty, which lets us drop the continue keyword. Once again, the IDE comes in handy for this simple refactoring: in IntelliJ, we need to highlight the if and press Alt+Enter.
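To illustrate, here’s the loop from readFile before and after the inversion, isolated into two hypothetical helper methods that return the same result. The negated condition costs nothing extra, since the unary ! operator isn’t penalized, while the labeled continue and its +1 disappear:

```java
import java.util.List;

/** The loop from readFile before and after inverting the if (hypothetical method names). */
class InvertCondition {

    // for +1, if +2 (nesting level 1), continue to a label +1 => 4
    static String joinSkippingBlank(List<String> lines) {
        String text = "";
        OUT:
        for (String line : lines) {
            if (line.trim().isEmpty()) {
                continue OUT;
            }
            text += line;
        }
        return text;
    }

    // for +1, if +2 (nesting level 1) => 3
    static String joinNonBlank(List<String> lines) {
        String text = "";
        for (String line : lines) {
            if (!line.trim().isEmpty()) {
                text += line;
            }
        }
        return text;
    }
}
```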

5.3. Language Features

We should also leverage language features to avoid flow-breaking structures whenever possible. For example, we can use multiple catch blocks to handle different exceptions separately. This spares us the extra if statement nested inside the catch block, along with its nesting penalty.

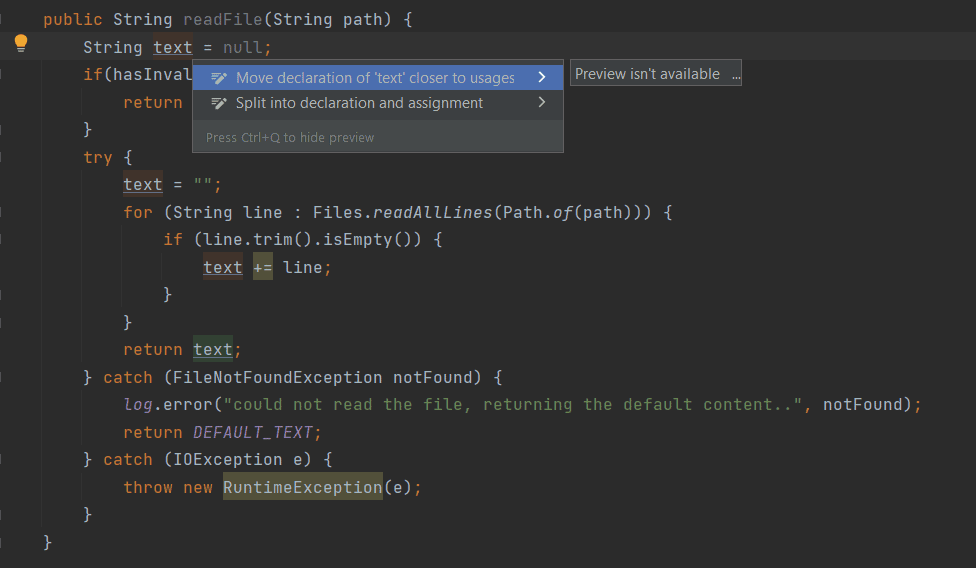
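A minimal sketch of this idea, assuming the file is read with Files.readAllLines(), which reports a missing file as a NoSuchFileException. The class name, the DEFAULT_TEXT value, and logging to System.err are hypothetical stand-ins, and the path validation is left out. The more specific exception has to be caught first:

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.NoSuchFileException;
import java.nio.file.Path;
import java.util.List;

/** Two catch blocks replace the if/else that used to sit inside a single catch. */
class MultiCatch {

    static final String DEFAULT_TEXT = "default text"; // hypothetical value

    // for +1, if +2, catch +1, catch +1, ternary +1 => 6
    static String readOrDefault(String path) {
        String text = null;
        try {
            List<String> lines = Files.readAllLines(Path.of(path));
            text = "";
            for (String line : lines) {
                if (!line.trim().isEmpty()) {
                    text += line;
                }
            }
        } catch (NoSuchFileException e) { // no nested if needed to tell the cases apart
            System.err.println("could not find " + path + ", returning the default content");
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return text == null ? DEFAULT_TEXT : text;
    }
}
```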

5.4. Early Returns

Early returns can also make the method shorter and easier to understand. In this case, an early return can help us deal with the ternary operator at the end of the function.

As we can see, we have an opportunity to introduce an early return for the text variable and to handle the missing-file exception by returning DEFAULT_TEXT directly from its catch block. This also lets us reduce the scope of the text variable by moving its declaration closer to its usage (Alt+M in IntelliJ).

This adjustment improves the code’s organization and avoids the use of null.
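Here’s a sketch of what the early returns might look like, under the same assumptions as before: Files.readAllLines() signals a missing file with a NoSuchFileException, and the class name, the DEFAULT_TEXT value, and logging to System.err are hypothetical. The path validation is again left out:

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.NoSuchFileException;
import java.nio.file.Path;

/** Early returns replace the trailing ternary, so text is never null. */
class EarlyReturn {

    static final String DEFAULT_TEXT = "default text"; // hypothetical value

    // for +1, if +2, catch +1, catch +1 => 5
    static String readOrDefault(String path) {
        try {
            String text = ""; // declared close to its usage
            for (String line : Files.readAllLines(Path.of(path))) {
                if (!line.trim().isEmpty()) {
                    text += line;
                }
            }
            return text;
        } catch (NoSuchFileException e) {
            System.err.println("could not find " + path + ", returning the default content");
            return DEFAULT_TEXT; // early return instead of the final ternary
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```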

5.5. Declarative Code

Lastly, declarative patterns usually decrease the code’s nesting level and complexity. For example, Java Streams can help us make the code more compact and readable. Let’s use Files.lines(), which returns a Stream<String>, instead of Files.readAllLines(). Additionally, we can check whether the file exists right after the initial path validation, because both checks lead to the same return value.

The resulting code has only two penalties, for the if statement and the logical operator performing the initial argument validation:

```java
public String readFile(String path) {
    // +1 for the if statement; +1 for the logical operator
    if (hasInvalidPath(path) || fileDoesNotExist(path)) {
        return DEFAULT_TEXT;
    }
    // Files.lines() keeps the file open, so we close the stream with try-with-resources
    try (Stream<String> lines = Files.lines(Path.of(path))) {
        return lines
            .filter(not(line -> line.trim().isEmpty()))
            .collect(Collectors.joining(""));
    } catch (IOException e) {
        throw new RuntimeException(e);
    }
}
```
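For a runnable version, the class below puts the refactored method together with its helpers. The article doesn’t show fileDoesNotExist, so implementing it with Files.notExists() is an assumption. The class name and the DEFAULT_TEXT value are hypothetical:

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.stream.Collectors;
import java.util.stream.Stream;

import static java.util.function.Predicate.not;

/** The refactored readFile with its helpers, assembled into one class. */
class TextFileReader {

    static final String DEFAULT_TEXT = "default text"; // hypothetical value

    // cognitive complexity = 2: +1 for the if, +1 for the || sequence
    static String readFile(String path) {
        if (hasInvalidPath(path) || fileDoesNotExist(path)) {
            return DEFAULT_TEXT;
        }
        try (Stream<String> lines = Files.lines(Path.of(path))) {
            return lines
                .filter(not(line -> line.trim().isEmpty()))
                .collect(Collectors.joining(""));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // cognitive complexity = 1: +1 for the || sequence
    static boolean hasInvalidPath(String path) {
        return path == null || path.trim().isEmpty() || !path.endsWith(".txt");
    }

    // assumption: one possible implementation of the helper used above
    static boolean fileDoesNotExist(String path) {
        return Files.notExists(Path.of(path));
    }
}
```

For example, a file containing the lines "hello", an empty line, and " world" is read as "hello world".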

6. Conclusion

Sonar developed the cognitive complexity metric to provide a precise means of assessing code readability and maintainability. In this article, we covered how to calculate the cognitive complexity of a function.

After that, we examined the structures that disrupt the linear flow of the code. Finally, we discussed various refactoring techniques that allowed us to decrease the cognitive complexity of a function. We used IDE features to refactor a function and reduce its complexity score from eleven to two.